What is OCR?

Optical character recognition, or optical character reader (OCR), is the electronic or mechanical conversion of images of typed, handwritten, or printed text into machine-encoded text. The source can be a scanned document, a photo of a document, a scene photo (for example, the text on signs and billboards in a landscape photo), or subtitle text superimposed on an image (for example, from a television broadcast).

Apple's Vision framework lets developers apply computer vision algorithms to perform a variety of tasks on input images and video, including text recognition.

What to expect?

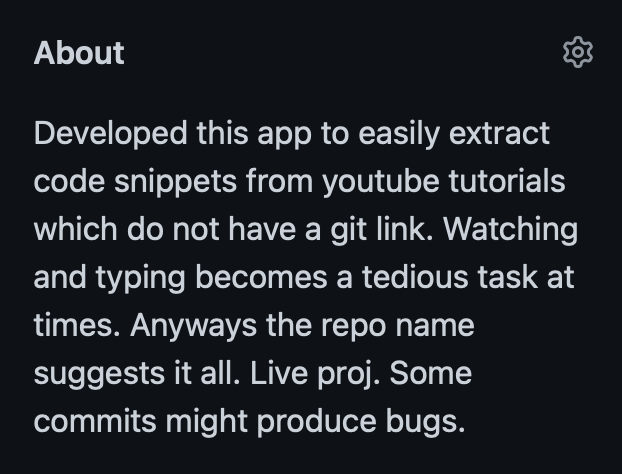

Output: About Developed this app to easily extract code snippets from youtube tutorials which do not have a git link. Watching and typing becomes a tedious task at times. Anyways the repo name suggests it all. Live proj. Some commits might produce bugs.

Code block

Import the Vision framework alongside UIKit. Module names are case-sensitive.

import UIKit

import Vision

Give the app access to the camera and photo library so the user can take or select an image for character recognition. Add the necessary usage descriptions to Info.plist (for example, NSCameraUsageDescription for the camera).

@IBAction func didTapCamera(){

presentPhotoActionSheet()

}

extension ViewController: UIImagePickerControllerDelegate, UINavigationControllerDelegate{

func presentPhotoActionSheet() {

let actionSheet = UIAlertController(title: NSLocalizedString("Select Image", comment: ""),

message: NSLocalizedString("How would you like to select a picture?", comment: ""),

preferredStyle: .actionSheet)

actionSheet.addAction(UIAlertAction(title: NSLocalizedString("Cancel", comment: ""),

style: .cancel,

handler: nil))

actionSheet.addAction(UIAlertAction(title: NSLocalizedString("Take Photo", comment: ""),

style: .default,

handler: { [weak self] _ in

self?.presentCamera()

}))

actionSheet.addAction(UIAlertAction(title: NSLocalizedString("Choose Photo", comment: ""),

style: .default,

handler: { [weak self] _ in

self?.presentPhotoPicker()

}))

present(actionSheet, animated: true)

}

func presentCamera() {

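// The camera is unavailable on the simulator and some devices; return early instead of crashing.

guard UIImagePickerController.isSourceTypeAvailable(.camera) else { return }

let vc = UIImagePickerController()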

vc.sourceType = .camera

vc.delegate = self

vc.allowsEditing = true

present(vc, animated: true)

}

func presentPhotoPicker() {

let vc = UIImagePickerController()

vc.sourceType = .photoLibrary

vc.delegate = self

vc.allowsEditing = true

present(vc, animated: true)

}

func imagePickerController(_ picker: UIImagePickerController, didFinishPickingMediaWithInfo info: [UIImagePickerController.InfoKey : Any]) {

picker.dismiss(animated: true, completion: nil)

guard let selectedImage = info[UIImagePickerController.InfoKey.editedImage] as? UIImage else {

return

}

act.isHidden = false

act.startAnimating()

self.image.image = selectedImage

recognizeText(image: selectedImage)

}

func imagePickerControllerDidCancel(_ picker: UIImagePickerController) {

picker.dismiss(animated: true, completion: nil)

}

}
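UIImagePickerController shows the camera permission prompt on its own, so an explicit check is optional. If the app should react to a denied permission before presenting the camera, a check along these lines may help. This is a minimal sketch, not part of the original project: the helper name withCameraAccess is hypothetical, and it assumes AVFoundation is linked.

```swift
import AVFoundation
import UIKit

// Hypothetical helper: runs `onGranted` on the main queue only when the
// device has a camera and the user has allowed (or now allows) access.
func withCameraAccess(_ onGranted: @escaping () -> Void) {
    // The simulator and some devices have no camera at all.
    guard UIImagePickerController.isSourceTypeAvailable(.camera) else { return }

    switch AVCaptureDevice.authorizationStatus(for: .video) {
    case .authorized:
        DispatchQueue.main.async(execute: onGranted)
    case .notDetermined:
        // Triggers the system prompt; the callback may arrive on a background queue.
        AVCaptureDevice.requestAccess(for: .video) { granted in
            if granted { DispatchQueue.main.async(execute: onGranted) }
        }
    default:
        // .denied or .restricted: the user has to change this in Settings.
        break
    }
}
```

With this in place, the "Take Photo" handler could call withCameraAccess { [weak self] in self?.presentCamera() } instead of calling presentCamera() directly.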

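Add a function that recognizes text in the selected image. The snippets here assume the view controller has outlets named act (an activity indicator), image (an image view), and label (a label for the recognized text).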

private func recognizeText(image: UIImage?){

let request = VNRecognizeTextRequest(completionHandler: { [weak self] (request, error) in

// Collect the best candidate from each observation; a failed request yields an empty string.

var textString = ""

let observations = request.results as? [VNRecognizedTextObservation] ?? []

for observation in observations {

guard let topCandidate = observation.topCandidates(1).first else {

continue

}

textString += "\n\(topCandidate.string)"

}

// Update the UI once, on the main thread, after all observations are processed.

DispatchQueue.main.async {

self?.act.isHidden = true

self?.act.stopAnimating()

self?.label.text = textString

}

})

request.recognitionLevel = .accurate

request.usesLanguageCorrection = true

let requests = [request]

DispatchQueue.global(qos: .userInitiated).async {

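guard let img = image?.cgImage else {

print("Could not get a CGImage from the selected image")

return

}

let handler = VNImageRequestHandler(cgImage: img, options: [:])

// Report failures instead of silently discarding them.

do {

try handler.perform(requests)

} catch {

print("Text recognition failed: \(error)")

}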

}

}
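One thing to watch for: a CGImage does not carry the orientation stored in a UIImage, so photos taken in portrait may reach Vision rotated and recognize poorly. A possible remedy is to pass the orientation to the request handler explicitly. The sketch below is an illustration rather than part of the original project; the helper name makeRequestHandler is hypothetical.

```swift
import ImageIO
import UIKit
import Vision

// Maps UIKit's orientation enum onto the EXIF-style orientation Vision expects.
// The two enums use different raw values, so a case-by-case switch is needed.
extension CGImagePropertyOrientation {
    init(_ uiOrientation: UIImage.Orientation) {
        switch uiOrientation {
        case .up: self = .up
        case .upMirrored: self = .upMirrored
        case .down: self = .down
        case .downMirrored: self = .downMirrored
        case .left: self = .left
        case .leftMirrored: self = .leftMirrored
        case .right: self = .right
        case .rightMirrored: self = .rightMirrored
        @unknown default: self = .up
        }
    }
}

// Hypothetical helper: builds a request handler that carries the image's
// orientation along. Returns nil when the image has no CGImage backing.
func makeRequestHandler(for image: UIImage) -> VNImageRequestHandler? {
    guard let cgImage = image.cgImage else { return nil }
    return VNImageRequestHandler(cgImage: cgImage,
                                 orientation: CGImagePropertyOrientation(image.imageOrientation),
                                 options: [:])
}
```

Inside recognizeText, the handler returned by makeRequestHandler(for:) could stand in for the one created with VNImageRequestHandler(cgImage:options:).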

Support or Contact

Drop me a mail and we’ll sort it out.